Collaboration Opportunity: Investigating the Effectiveness of a Bias Detector in Educational Discourse (Phase 1)


tl;dr We are looking to partner with educators and researchers to test the effectiveness of a framework/tool that detects non-neutrality and expressions of unconscious bias in online academic discourse. This solution has the potential to reduce non-neutral and unconsciously biased language and to improve students’ sense of inclusion, mental health, and overall well-being. If successful, it could improve the experience of the millions of students who use Canvas LMS.

Background

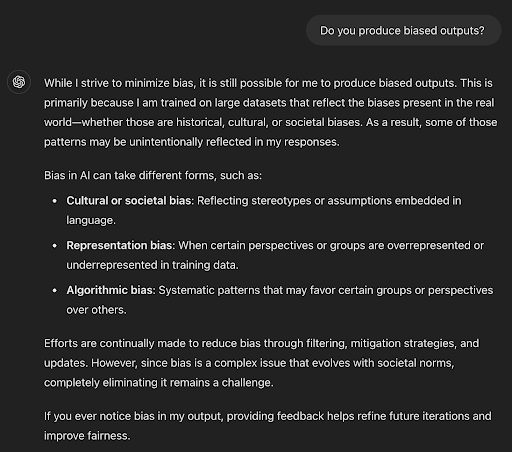

It’s no secret that biases are out there.

Language is biased; just look to our LLMs for the evidence. Studies of word embeddings and other language models have shown that they can perpetuate and even amplify the stereotypes and prejudices present in their training data (Bolukbasi et al., 2016; Caliskan et al., 2017). As educators begin to embrace AI and use it in their day-to-day work, it is important for them to be aware that these biases exist (Ouyang et al., 2023; U.S. Department of Education, n.d.). Further, an essential competency for today’s educators is understanding how their own language choices could inadvertently express bias and negatively affect their students’ well-being.

Expressions of unconscious bias can reinforce harmful stereotypes and trigger stereotype threat, in which a person’s fear of confirming a negative stereotype about their social group leads them to inadvertently confirm it (Steele & Aronson, 1995). Stereotype threat has been tied to negative effects on the following:

- academic performance

- psychological well-being

- cognitive performance

- self-regulation

- emotional responses

- sense of belonging

(Steele, 1997; Murphy et al., 2007; Schmader et al., 2008; Walton & Cohen, 2007).

Instructors hold an authority that makes their choice of words particularly impactful. Even when instructors have the best of intentions, unintentionally biased language can reinforce harmful stereotypes, contribute to stereotype threat, and create a less inclusive environment (Lorenz, 2021; Gordon, 2021). Students can internalize these biases, seeing them as sanctioned by someone in a position of power.

Feedback, grading, and classroom interactions are just a few of the areas where language can especially affect learning. Instructor feedback has been shown to affect students’ self-perception and academic motivation (Torres et al., 2020), and subtle biases in everyday communication, such as favoring certain students or relying on gendered or racial stereotypes, can harm the classroom dynamic and undermine marginalized students’ sense of belonging (Steele & Aronson, 1995).

Research has shown that subjective bias in text can be automatically detected and neutralized, including framing bias (subjective words like best, riot, or exposed), epistemological bias (presuppositions or hidden assumptions within an interpretation), and demographic bias (presuppositions about particular genders, races, etc.; Pryzant et al., 2020). The results suggest that such a model can provide useful suggestions for reducing these subjective biases in real-world texts (Pryzant et al., 2020). Further work with Wikipedia neutrality edits found that LLMs were underwhelming at detecting violations of Wikipedia’s neutral point of view (NPOV) policy but more successful at generating NPOV edits, and these generated edits were preferred over edits made by humans (Ashkinaze et al., 2024).
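To make the first of these categories concrete, here is a toy sketch that flags framing-bias candidates using a small hand-picked word list. It is not the Pryzant et al. (2020) model or the tool described in this post; the `FRAMING_WORDS` set, the `Flag` record, and `flag_framing_words` are hypothetical names used only for illustration. A fixed word list also cannot capture epistemological or demographic bias, which depend on context.

```python
import re
from dataclasses import dataclass

# Hypothetical toy lexicon seeded with the framing-bias examples cited above.
# A real detector would rely on a trained model, not a fixed word list.
FRAMING_WORDS = {"best", "riot", "exposed"}

@dataclass
class Flag:
    word: str      # the matched token exactly as it appears in the text
    start: int     # character offset where the match begins
    end: int       # character offset where the match ends (exclusive)
    category: str  # bias category assigned to the flag

def flag_framing_words(text: str) -> list[Flag]:
    """Return a Flag for every whole-word, case-insensitive lexicon match."""
    flags = []
    # Tokenize into runs of letters/apostrophes so "bestow" is not matched as "best".
    for match in re.finditer(r"[A-Za-z']+", text):
        if match.group().lower() in FRAMING_WORDS:
            flags.append(Flag(match.group(), match.start(), match.end(), "framing"))
    return flags

if __name__ == "__main__":
    sample = "This is the best essay, and it exposed a real gap in the argument."
    for flag in flag_framing_words(sample):
        print(flag.word, flag.start, flag.end, flag.category)
```

In the sample sentence, "exposed" is used neutrally yet still gets flagged. That false positive illustrates why context evaluation matters and why a word list alone is not enough.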

Approach & Purpose

We want to alleviate the issues associated with expressions of unconscious bias and have been developing a tool that flags biased language in online academic discourse. Our approach builds on the work of Ashkinaze et al. (2024) to create a first-draft edit suggester for educational contexts. Although the LLMs studied by Ashkinaze et al. (2024) performed poorly at bias detection, they did well at generation, which suits our purpose: a tool that suggests potential edits for flagged text. Our goal is to create a strong foundation for a future tool that helps educators recognize their use of non-neutral words and expressions of unconscious bias.

To test our solution’s applicability and usefulness as a first-draft edit suggester in an educational context, we need real education data. In this phase of the research, the focus would be on evaluating the performance of this newly developed bias detection solution using course data we have been granted express permission to analyze. A combination of quantitative analyses (accuracy, precision, recall) and qualitative analyses (error analysis, context evaluation) would be used, and Phase 1 seeks to answer the following key questions:

- How accurately does the solution detect non-neutral or biased language across different types of texts?

  - Academic discourse

  - Instructional content

  - Instructor feedback

- Does the solution offer acceptable suggestions to correct overt and subtle forms of non-neutral and biased language?

- Does it generalize well across multiple contexts?

  - Courses and teachers

  - Academic disciplines

  - Institutions

  - Regions
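The quantitative measures named above can be computed by comparing the tool's flags with human annotations. The sketch below assumes each passage has a binary human label (biased or not) and a binary prediction from the tool; the `Example` record and its `context` field (for instance, a discipline or institution) are hypothetical, and scoring individual flagged spans would need a different setup. Grouping results by context gives one simple view of the generalization question.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Example:
    context: str     # hypothetical grouping key, e.g. a discipline or institution
    gold: bool       # human annotation: does the passage contain biased language?
    predicted: bool  # did the tool flag the passage?

def scores(examples):
    """Accuracy, precision, and recall for binary passage-level labels."""
    tp = sum(e.gold and e.predicted for e in examples)
    fp = sum((not e.gold) and e.predicted for e in examples)
    fn = sum(e.gold and not e.predicted for e in examples)
    correct = sum(e.gold == e.predicted for e in examples)
    accuracy = correct / len(examples) if examples else 0.0
    # Precision: share of flagged passages that humans also judged biased.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of human-labeled biased passages that the tool flagged.
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

def scores_by_context(examples):
    """Compute the same metrics separately for each context."""
    groups = defaultdict(list)
    for e in examples:
        groups[e.context].append(e)
    return {context: scores(group) for context, group in groups.items()}

if __name__ == "__main__":
    # Made-up labels for illustration only; these are not study data.
    data = [
        Example("biology", True, True),
        Example("biology", False, True),
        Example("biology", False, False),
        Example("history", True, False),
        Example("history", True, True),
        Example("history", False, False),
    ]
    print(scores(data))
    print(scores_by_context(data))
```

Reporting precision and recall per context, rather than a single pooled number, can reveal whether the tool works well in one discipline but over-flags or misses biased language in another.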

By working with educators and real data, we believe the development of this solution stands a greater chance of success. Upon validation of the solution’s performance in Phase 1, subsequent phases would focus on integrating the solution into a tool for educators to help them reduce biased language and improve classroom inclusivity.

Phase 2 (subject to the success of Phase 1) would involve field-testing the solution in a bias detection tool that would be used by educators. Teachers would be divided into experimental and control groups, and the tool's impact would be measured through language analysis, teacher and student surveys, and interviews.
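One common way to form experimental and control groups is simple random assignment. The sketch below shuffles a list of hypothetical teacher IDs with a fixed seed, so the assignment can be reproduced and audited, and splits the list in half. The function name, seed value, and IDs are assumptions for illustration; an actual study might instead stratify by discipline or institution so both groups are balanced on those factors.

```python
import random

def assign_groups(teacher_ids, seed=2024):
    """Randomly split teachers into experimental and control groups.

    A fixed seed makes the assignment reproducible. With an odd number of
    teachers, the extra teacher goes to the control group.
    """
    ids = list(teacher_ids)    # copy so the caller's list is left untouched
    rng = random.Random(seed)  # independent generator, not the global one
    rng.shuffle(ids)
    half = len(ids) // 2
    return {"experimental": ids[:half], "control": ids[half:]}

if __name__ == "__main__":
    teachers = [f"T{i:02d}" for i in range(1, 8)]  # hypothetical IDs T01..T07
    groups = assign_groups(teachers)
    print(len(groups["experimental"]), len(groups["control"]))  # 3 4
```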

Recruitment

We are seeking permission to analyze course materials, specifically instructional content and instructor feedback, within Canvas LMS. We are looking for a diverse set of course materials from different disciplines and institutions to help assess the solution’s performance. Because this solution analyzes real course materials, we see obtaining informed consent from instructors and institutions as essential. All stakeholders must be aware of how their data (including instructional content and feedback) will be used for these testing purposes.

If interested, feel free to complete this form, and we will reach out to you: https://Instructure.qualtrics.com/jfe/form/SV_1NfnjcjELRSdCrI

NOTE: No student data will be included in this analysis. All data used to train and test this solution will be drawn from instructor exchanges (from instructors who consent to participate); no student-generated discourse, such as discussion forum posts or message replies, will be used.

Importance and Impact

This study’s value lies in its potential. It seeks to fill a research gap and, in an effort to reduce bias, could offer a scalable solution for millions of educators.

- Phase 1 would contribute to the larger body of work suggesting that AI tools can enhance fairness in learning experiences. Existing literature shows potential for AI in recognizing and addressing bias, but there is a lack of large-scale studies investigating whether bias detection tools can mitigate stereotype threat in educational environments (Brookings Institution, 2024; IntechOpen, 2024).

- Phase 2 would fill this gap. There is a well-documented connection between biased language, stereotype threat, and negative student outcomes, especially for marginalized groups (Steele, 1997; Spencer et al., 1999). Testing the adoption and effectiveness of a (to-be-built) bias detection tool would provide evidence for the efficacy of this solution. It would also help educators avoid unintentionally using biased language with their students, ultimately improving both classroom inclusivity and student performance.

If successful, this research would provide a sound basis for the continued development of this solution and contribute to positive changes in student outcomes. This research could help teachers reduce their use of non-neutral/biased language over time, leading to more inclusive communication in their courses. In the long run, students in courses where a tool like this is used are anticipated to feel more included and have a greater sense of belonging compared to students in classes without such a solution.

As we see AI being rapidly integrated into education, creating solutions for the greater good to support student success—especially in terms of inclusivity and emotional well-being—has never been more important.

References

Anderson, J. (Host). (2020, October 22). Unconscious bias in schools [Audio podcast episode]. In Harvard EdCast. Harvard Graduate School of Education. https://www.gse.harvard.edu/news/20/10/harvard-edcast-unconscious-bias-schools

Ashkinaze, J., Guan, R., Kurek, L., Adar, E., Budak, C., & Gilbert, E. (2024). Seeing like an AI: How LLMs apply (and misapply) Wikipedia neutrality norms. arXiv preprint arXiv:2407.04183. https://arxiv.org/pdf/2407.04183

Bolukbasi, T., Chang, K. W., Zou, J. Y., Saligrama, V., & Kalai, A. T. (2016). Man is to computer programmer as woman is to homemaker? Debiasing word embeddings. Proceedings of the 30th International Conference on Neural Information Processing Systems, 4349–4357. https://proceedings.neurips.cc/paper/2016/file/a486cd07e4ac3d270571622f4f316ec5-Paper.pdf

Brookings Institution. (2024). Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms. Brookings. https://www.brookings.edu/research/algorithmic-bias-detection-and-mitigation/

Caliskan, A., Bryson, J. J., & Narayanan, A. (2017). Semantics derived automatically from language corpora contain human-like biases. Science, 356(6334), 183–186. https://doi.org/10.1126/science.aal4230

Center for Teaching Excellence. (2024). Addressing bias in AI. University of Kansas - Center for Teaching Excellence. https://cte.ku.edu/addressing-bias-ai

Chin, M. J., Quinn, D. M., Dhaliwal, T. K., & Lovison, V. S. (2020). Bias in the air: A nationwide exploration of teachers’ implicit racial attitudes, aggregate bias, and student outcomes. Educational Researcher, 49(8), 566-578. https://doi.org/10.3102/0013189X20937240

Gordon, A. D. (2021). Better than our biases: Using psychological research to inform our approach to inclusive, effective feedback. Clinical Law Review, 27, 195. https://www.law.nyu.edu/sites/default/files/Anne%20Gordon%20-%20Better%20than%20Our%20Biases.pdf

IntechOpen. (2024). AI for equity: Unpacking potential human bias in decision making in higher education. IntechOpen. https://www.intechopen.com/chapters/76183

Lorenz, G. (2021). Subtle discrimination: Do stereotypes among teachers trigger bias in their expectations and widen ethnic achievement gaps? Social Psychology of Education, 24(2), 537-571. https://link.springer.com/content/pdf/10.1007/s11218-021-09615-0.pdf

Murphy, M. C., Steele, C. M., & Gross, J. J. (2007). Signaling threat: How situational cues affect women in math, science, and engineering settings. Psychological Science, 18(10), 879-885. https://doi.org/10.1111/j.1467-9280.2007.01995.x

Ouyang, S., Wang, S., Liu, Y., Zhong, M., Jiao, Y., Iter, D., Pryzant, R., Zhu, C., Ji, H., & Han, J. (2023). The shifted and the overlooked: A task-oriented investigation of user-GPT interactions. Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics. https://aclanthology.org/2023.emnlp-main.146.pdf

Pryzant, R., Martinez, R. D., Dass, N., Kurohashi, S., Jurafsky, D., & Yang, D. (2020, April). Automatically neutralizing subjective bias in text. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 34, No. 01, pp. 480-489). https://arxiv.org/pdf/1911.09709

Quinn, D. M. (2020). Racial bias in grading: Evidence from teacher-student relationships in higher education. Educational Evaluation and Policy Analysis, 42(3), 444-467. https://doi.org/10.3102/0162373720942453

Schmader, T., Johns, M., & Forbes, C. (2008). An integrated process model of stereotype threat effects on performance. Psychological Review, 115(2), 336-356. https://doi.org/10.1037/0033-295X.115.2.336

Spencer, S. J., Steele, C. M., & Quinn, D. M. (1999). Stereotype threat and women’s math performance. Journal of Experimental Social Psychology, 35(1), 4-28. https://doi.org/10.1006/jesp.1998.1373

Steele, C. M., & Aronson, J. (1995). Stereotype threat and the intellectual test performance of African Americans. Journal of Personality and Social Psychology, 69(5), 797-811. https://doi.org/10.1037/0022-3514.69.5.797

Torres, J. T., Strong, Z. H., & Adesope, O. O. (2020). Reflection through assessment: A systematic narrative review of teacher feedback and student self-perception. Studies in Educational Evaluation, 64, 100814. https://www.academia.edu/download/111891842/j.stueduc.2019.10081420240227-1-jjna9r.pdf

U.S. Department of Education. (n.d.). AI Inventory. Office of the Chief Information Officer. Retrieved September 12, 2024, from https://www2.ed.gov/about/offices/list/ocio/technology/ai-inventory/index.html

Walton, G. M., & Cohen, G. L. (2007). A question of belonging: Race, social fit, and achievement. Journal of Personality and Social Psychology, 92(1), 82-96. https://doi.org/10.1037/0022-3514.92.1.82
