Welcome to the world of Canvas Data 2 inconsistency.

I am making some assumptions here, as your post is not completely clear to me. If I understand correctly,

- You are using the DAP client to maintain a set of tables in Postgres

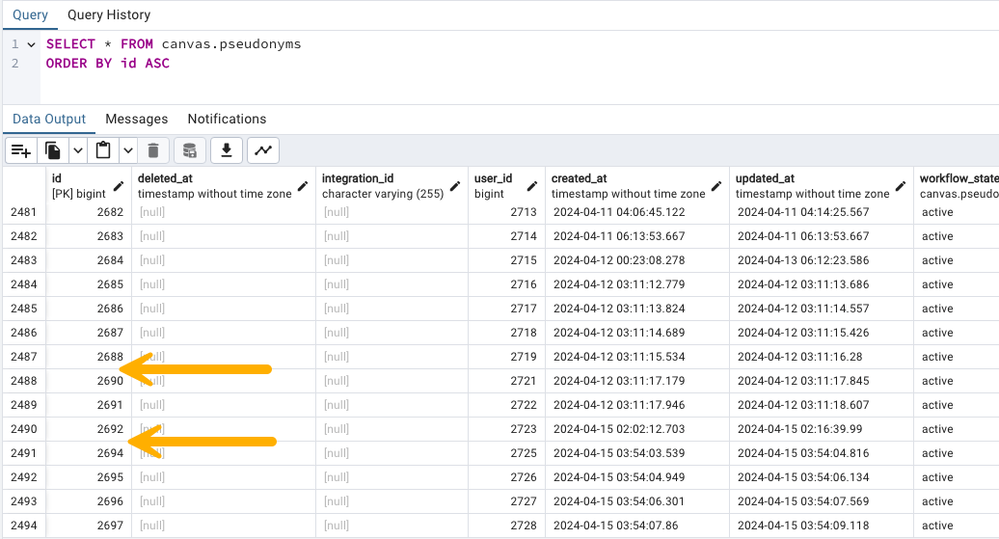

- After a recent run of the client (which would use an incremental update), you have noticed missing entries in Pseudonyms

- (My assumption from what you have written) - You have run a snapshot query against CD2 using the CLI or a separate client, not updating your database, and this has shown the entries present.

My initial questions with this would be:

- Have you tried another incremental update via the DAP client, and has that fetched the missing entries?

- When was the last time you ran the update prior to the one where you found missing items?

During the Beta phase of Canvas Data 2, I had long discussions about the lack of referential integrity in Canvas Data 2 - where things like this could occur, where users existed, but the pseudonyms did not. Instructure have classified the system has providing "eventual consistency" in that eventually the records should be present and consistent, but I have begged to differ and consider it to be "continually inconsistent".

I currently have tickets outstanding for missing data from CD2 - but it is probably not caused by this, but by Canvas errors during updates that cause some records to be left in inconsistent states such that the streaming logs that CD2 relies on do not reflect actual data - that is a completely different world of pain.

What I think is happening here, is a result of the way data is streamed to CD2, and the way the queries work.

In order to save space, and make the various queries more efficient within the constraints of the design choices made, only the most recent version of every record is stored. If a record is updated multiple times in a short space of time, only the most recent state will be retained (or ever present) in CD2. Each record is timestamped with the time that record is committed into CD2 - which is not the time of the actual update. There is no guaranteed timing of these streamed updates, and different tables can be update at different times - so you may have newer values in users, but the pseudonyms have not been update at that point.

Because there is only one version of each record, and the timestamp only reflects when it was persisted into CD2, it is not possible to generate a set of data consistent across tables at any given point in time. The only thing the timestamps can be used for, is to try and incrementally fetch data such that all records eventually get updated.

I don't have any knowledge of how locking on update or read is performed when accessing Canvas Data 2. I am guessing (and this is only a guess), that the updating of data into CD2 is done on a transactional basis per table, and that all updates for a given timestamp are committed at the same time, but this may not be the case, and updates may be committed on a more frequent basis, potentially record by record. I am also guessing that the reads (be it snapshot or incremental) do not lock at all, and are effectively dirty reads of whatever data is available. To provide consistent sets, be it for snapshot or incremental, the queries return the "until" parameter, which gives the starting point for the next incremental update.

What I am guessing, is that to provide the best approximation of consistency, is that at the start of a query, the maximum current timestamp is determined, and used to return the "until". All records for the table are then fetched, and any greater than the "from" (for incremental queries, or no lower bound for snapshots) and less than or equal to the "until" are returned. I believe this is a realistic assumption, as the query supports supply of the "until" parameter, which is not recommend, but can be used to effectively paginate updates, so the underlying processing obviously uses it.

If there is not full locking on updates and reads (which I highly doubt), the possibility exists (and this is what I suspect may have happened here) is that the following scenario is in play:

- A record Z has been first created at time t1 in Canvas

- At timestamp t2, record Z is created in Canvas Data 2 (which is later than the last incremental query done when the highest timestamp was t0)

- At time t3, record is updated in Canvas

- At time t4, an incremental query is requested against the table. At this point in time, the maximum timestamp on the table is t2

- The extract query commences extracting records with timestamps greater than t0 and less then t2

- At timestamp t5 (greater than t4 by a very small amount), record Z is updated in Canvas Data 2, and the timestamp is changed to t5

- At time t6 (greater than t5 by a very small amount), record Z is read for processing in the extract, and bypassed because the timestamp t5 on it is greater than the t2 maximum being processed for this run

If this is true, a subsequent incremental query will obtain the updated record - where the prior creation was not obtained when expected due to the subsequent update.

The timestamps on the information you have provided are not conclusive - the updated at timestamp from snapshot shows a Zulu timezone, and the records present in your screenshot from the Database that have an updated at time slightly later than that are without timezone - so I am not sure if the Zulu time from the extract has been shifted to a local timezone in the database.